When a headline reads “Power Usage in Wyoming data center could eclipse consumption of the state’s human residents by 5x, the tenant of colossal investment remains a mystery,” it grabs your attention. The article goes on to explain that the data center is expected to start at 1.8 gigawatts (GW) and could expand to 10 GW. At 10 GW, the facility would consume more than double the state’s entire annual electricity generation.
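
To put these figures in perspective, a continuous load in gigawatts converts to annual energy by multiplying by the 8,760 hours in a year. The sketch below is a simple upper bound: it assumes the site draws its full rated power all year (`utilization=1.0`), which real facilities are unlikely to do, and it does not use any figure for Wyoming's actual generation.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours; leap years ignored


def annual_energy_twh(load_gw: float, utilization: float = 1.0) -> float:
    """Annual energy in TWh for a facility rated at load_gw.

    utilization is the assumed average fraction of rated load actually drawn;
    1.0 means running flat out around the clock (an upper bound).
    """
    gwh = load_gw * utilization * HOURS_PER_YEAR
    return gwh / 1000  # 1 TWh = 1,000 GWh


for load in (1.8, 10.0):
    print(f"{load:>4} GW -> {annual_energy_twh(load):.1f} TWh/year")
```

At full load, 1.8 GW works out to roughly 15.8 TWh per year and 10 GW to roughly 87.6 TWh.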

When that is followed by an article discussing Pennsylvania disconnecting from the grid because of AI power demands, you start to get concerned.

The Pennsylvania situation stems from the grid not expanding fast enough and needing additional resources to connect renewable projects. One concern is how fast power prices are rising and the impact on consumers. The article states, “Last year’s annual capacity auction for energy capacity saw prices increase by over 800%, and with an impending auction for this year, those prices are expected to rise again. It’s led to a projected 20% rise in consumer energy prices across Pennsylvania and other PJM-covered territories this summer.” It also notes that PJM, the regional grid operator, is responsible for power in the Virginia AI corridor, which is expanding at a rapid clip.
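
It is worth being precise about what an 800% increase means: the price rises by eight times its old value, so it ends at nine times where it started, not eight. A minimal sketch, using hypothetical starting prices rather than actual auction or utility figures:

```python
def price_after_increase(old_price: float, pct_increase: float) -> float:
    """Return the price after a percentage increase.

    An X% increase adds X/100 of the old price, so the new price is
    old_price * (1 + X/100). An 800% increase therefore means 9x, not 8x.
    """
    return old_price * (1 + pct_increase / 100)


# Hypothetical starting price of 1.0 (arbitrary units), not an actual auction price
print(price_after_increase(1.0, 800))  # 9.0
# The projected 20% consumer rise applied to a hypothetical $100 bill
print(round(price_after_increase(100.0, 20), 2))  # 120.0
```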

Last month, I discussed data center and grid issues, including the challenges facing data centers and how they are now locating where power is available. This is likely one reason for the Wyoming data center's location: Wyoming currently exports more than two-thirds of its energy.

In the remainder of this blog, let’s discuss some of the actions data centers are taking to reduce their energy demand footprint and what other potential solutions there may be to reduce power consumption.

Let’s start at the beginning with chips. The Nvidia systems used for AI have grown in transistor count, data throughput, and power consumption. The H100 chip has 80 billion transistors, moves 3TB of data per second, and consumes 700 watts. The Nvidia B200 has 208 billion transistors, operates at 9TB per second, and consumes over 1,000 watts. That is a 3x performance improvement for an increase of roughly 43% in power.

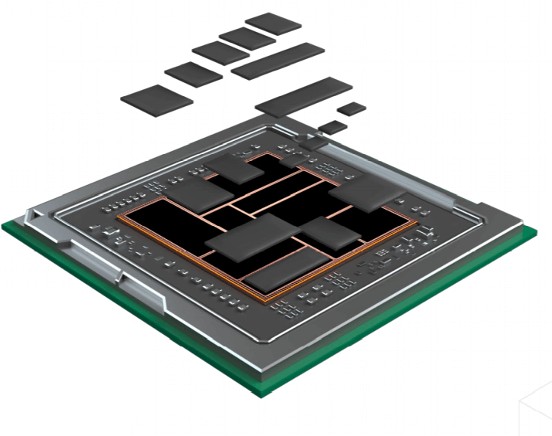
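
The H100 and B200 figures above can be turned into a performance-per-watt comparison. This sketch treats the TB/s numbers as the performance measure, as the text does, and uses 1,000 W for the B200; since the B200 draws "over" 1,000 W, the power increase is a lower bound and the per-watt gain an upper bound. The function name is illustrative.

```python
def hardware_ratios(old_perf: float, new_perf: float,
                    old_watts: float, new_watts: float) -> tuple[float, float, float]:
    """Return (performance gain, power increase in %, performance-per-watt gain)."""
    perf_gain = new_perf / old_perf
    power_ratio = new_watts / old_watts
    power_increase_pct = (power_ratio - 1) * 100
    perf_per_watt_gain = perf_gain / power_ratio
    return perf_gain, power_increase_pct, perf_per_watt_gain


# H100: 3 TB/s at 700 W; B200: 9 TB/s at 1,000 W (figures quoted in the text)
perf, power_pct, ppw = hardware_ratios(3, 9, 700, 1000)
print(f"{perf:.1f}x throughput, +{power_pct:.0f}% power, {ppw:.1f}x per watt")
# prints "3.0x throughput, +43% power, 2.1x per watt"
```

In other words, each unit of work costs roughly half the energy on the newer chip, even though the chip itself draws more power.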

As a result, models can be trained more quickly and inference answers obtained faster, using less energy per task. Chip design, or chiplet design, is another area where power consumption can be lowered. Each new technology node can save power as transistor dimensions shrink; however, unlike earlier nodes, a new node no longer delivers both gains at once: you can take the benefit as lower power or as higher performance. Most companies opt for performance.

Moving to backside power delivery at sub-2 nm nodes has the potential to improve power performance by 20-30%. That could be substantial as AI chips shift to these nodes in a couple of years. Using chiplets to move the SRAM off the SoC onto a separate chip connected to the processor can provide a more direct path to memory and reduce the heat generated by routing signals across the chip.

Advances in high bandwidth memory (HBM) can also help reduce system power consumption. Moving from microbump bonding to hybrid bonding reduces the interconnect pitch and increases wiring density, allowing data and power to move more efficiently through the 3D package.

The move to photonics should also be a big energy saver: transferring data with light instead of over copper reduces the heat generated. Photonics use is accelerating in data centers to reduce power and heat, and also to improve bandwidth. With chiplet technology, chip-to-chip photonics is becoming a possibility, but it is still some way from implementation.
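
One way to see why the interconnect matters: the power a link draws is roughly its energy per bit multiplied by its data rate, so any cut in energy per bit, which is what photonics aims for, pays off in proportion to bandwidth. This is a minimal sketch that ignores fixed overheads such as lasers or SerDes idle power; the pJ/bit values in the usage are hypothetical placeholders, not measured figures for copper or optical links.

```python
def link_power_watts(pj_per_bit: float, bandwidth_tbps: float) -> float:
    """Approximate power of a data link: energy per bit x bits per second.

    pj_per_bit: energy to move one bit, in picojoules (1 pJ = 1e-12 J).
    bandwidth_tbps: sustained rate in terabits per second (1 Tb/s = 1e12 bit/s).
    The 1e-12 and 1e12 factors cancel, so pJ/bit x Tb/s gives watts directly.
    """
    return pj_per_bit * bandwidth_tbps


# Hypothetical values: the same 10 Tb/s of traffic at two energy-per-bit levels
print(link_power_watts(2.0, 10.0))  # 20.0
print(link_power_watts(1.0, 10.0))  # 10.0
```

Halving the energy per bit halves link power at the same bandwidth, and as bandwidth keeps growing, so does the absolute saving.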

Lastly, AI is now being used to help in layouts and design for chips and chiplets. It will be interesting to see if newer generation chips designed with AI can improve on the power performance curve, and if something new and different is developed to run AI models.

Data centers are looking for ways to reduce their power usage, especially at peak times. One approach is scheduling non-urgent tasks during off-peak hours. This reduces power draw at peak times and helps the grid and power producers manage and distribute power more easily. It is similar to how companies and office buildings use peak power management to reduce energy bills.
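
The off-peak idea can be sketched as a simple scheduler: urgent work runs immediately, while deferrable work is pushed to the first start time from which it can run entirely outside the peak window. This is a minimal illustration, not how any particular operator does it; the 14:00 to 20:00 peak window, the `Job` type, and the `deferrable` flag are all hypothetical, and capacity limits are not modeled.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    hours: int        # runtime needed, in whole hours
    deferrable: bool  # True for non-urgent work that can wait


# Hypothetical peak window, 14:00 to 20:00; real peak periods vary by utility and season
PEAK_HOURS = frozenset(range(14, 20))


def schedule(jobs: list[Job], start_hour: int = 0) -> dict[str, int]:
    """Return a start hour (0-23) for each job.

    Urgent jobs start immediately. Deferrable jobs are delayed to the first
    start hour from which their whole run avoids PEAK_HOURS. Capacity limits
    and multi-day horizons are not modeled.
    """
    plan: dict[str, int] = {}
    for job in jobs:
        if not job.deferrable:
            plan[job.name] = start_hour
            continue
        for delay in range(24):
            h = (start_hour + delay) % 24
            run_hours = {(h + i) % 24 for i in range(job.hours)}
            if not run_hours & PEAK_HOURS:
                plan[job.name] = h
                break
        else:
            # No peak-free window exists (job too long); run it now
            plan[job.name] = start_hour
    return plan


jobs = [Job("inference", 1, False), Job("batch-train", 4, True)]
print(schedule(jobs, start_hour=13))  # {'inference': 13, 'batch-train': 20}
```

Here the four-hour training job submitted at 13:00 would overlap the peak, so it waits until 20:00, while the urgent inference job runs right away.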

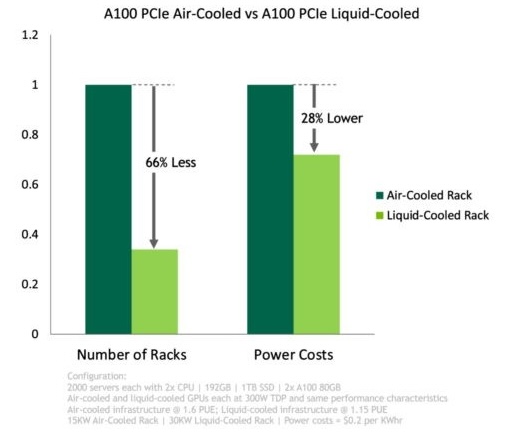

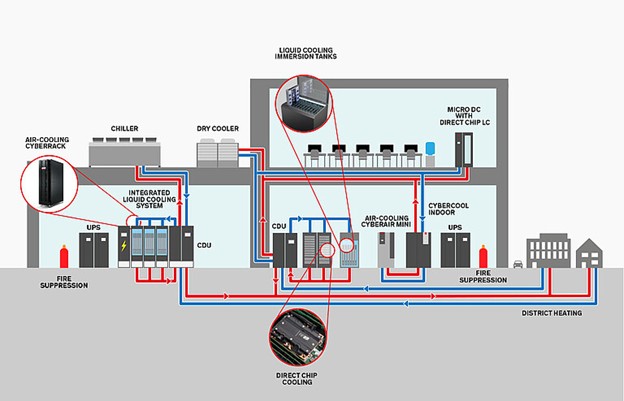

Moving to different types of liquid cooling can help reduce the energy used to cool data centers. Liquid cooling can take several forms:

1. Chip or package cooling, where the water meets the package or system.
2. Immersion cooling, where the server is placed into a liquid bath.
3. Systems where water runs through the server stack.

In the first two approaches, the systems process data more efficiently because they run cooler, and that thermal headroom also lets them run faster, further improving efficiency.
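
A common way to quantify cooling overhead is Power Usage Effectiveness (PUE): total facility power divided by the power delivered to IT equipment, where 1.0 would mean no overhead at all. The sketch below shows how a cooling upgrade shows up in that ratio; the facility numbers are hypothetical and not drawn from any real site.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness = total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment load must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_kw(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power going to cooling, power conversion, lighting, etc. rather than IT gear."""
    return total_facility_kw - it_equipment_kw


# Hypothetical site with a 1,000 kW IT load, before and after a cooling upgrade
it_load = 1000
before_total, after_total = 1500, 1200
print(f"PUE {pue(before_total, it_load):.2f} -> {pue(after_total, it_load):.2f}")
saved = overhead_kw(before_total, it_load) - overhead_kw(after_total, it_load)
print(f"Overhead saved: {saved} kW")  # Overhead saved: 300 kW
```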

Data centers are also using their excess heat to help generate power or heat local neighborhoods. This saves energy in other ways and benefits the community where the data center is located.

Now, to the models.

While newer chips can run AI models faster and more efficiently, the models themselves also need to become more efficient in how they are written and operate. According to the Wall Street Journal, some models, such as Alibaba’s Qwen3, are 50% smaller and perform as well or better. This suggests models are becoming more efficient, which could help reduce power consumption. The new OpenAI open-weight models also make it easier for users to customize them, which could support reduced power consumption as well.
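
Part of why smaller models can save power is that their weights take less memory to store and move, and moving data to and from memory costs energy. Weight memory is roughly parameter count times bytes per parameter. This is a minimal sketch: the parameter counts are hypothetical (not actual Qwen3 configurations), 16-bit weights are assumed, and activations, KV cache, and runtime overhead are ignored.

```python
def weight_memory_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory for model weights in GB (1 GB = 1e9 bytes).

    bytes_per_param = 2.0 assumes 16-bit weights; activations, KV cache and
    runtime overhead are ignored.
    """
    # params_billion * 1e9 params * bytes / (1e9 bytes per GB) simplifies to this
    return params_billion * bytes_per_param


# Hypothetical sizes for illustration only
large = weight_memory_gb(70)
small = weight_memory_gb(35)  # a model 50% smaller
print(f"{large:.0f} GB vs {small:.0f} GB")  # 140 GB vs 70 GB
```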

It’s still early in the AI space, and multiple models are competing for attention and power. Let’s hope we can either build enough power through the many options available, including renewables, to keep power affordable for consumers, or that the AI brain trusts figure out a way to implement AI in a more power-efficient manner.